Benchmarking AWS Lambda Cold Starts Across JavaScript Runtimes

We built Deno, a modern, simple, secure, zero-config JavaScript (and TypeScript) runtime, to radically simplify web development, and performance is a critical pillar of that mission.

In our benchmarking, we found that running Deno in AWS Lambda consistently has the lowest cold start time compared to other JavaScript runtimes. In this post, we’ll share our methodologies for running said benchmarks, their results, and tips on how to further optimize running production JavaScript with Deno in a serverless environment.

- Why cold starts matter

- Methodologies

- Benchmarks

- Startup time on Linux VM

- Optimizing Deno

- What’s next

Why cold starts matter

A cold start refers to a runtime starting from scratch, so the cold start time is defined as the total time a user must wait to get a response for their first request to an uninitialized runtime. Cold starts have a direct impact on the performance of your applications, especially latency-sensitive applications in real-time gaming, video streaming, high-frequency trading, e-commerce, etc. For instance, if an AWS Lambda function that retrieves e-commerce product details experiences a delay, it will result in a poor user experience and lower conversion rates.

Since higher cold start times impact user experience and potential revenue at a disproportionate rate, an important metric of cold start time performance is tail latency, or high-percentile latency. (While your service might average a 10ms latency, it’s the infrequent 100ms latency that your users will remember.) In our analysis, we will be examining the distribution of cold start times to gauge overall performance.
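To make the gap between average and tail latency concrete, here is a minimal sketch that computes the mean and nearest-rank percentiles of a latency sample. The sample values and the `percentile` helper are hypothetical and only illustrate the idea; they are not taken from our benchmark data.

```typescript
// Nearest-rank percentile: the smallest sample such that at least p% of all
// samples are less than or equal to it.
function percentile(samples: number[], p: number): number {
  if (samples.length === 0) throw new Error("no samples");
  if (p <= 0 || p > 100) throw new RangeError("p must be in (0, 100]");
  const sorted = [...samples].sort((a, b) => a - b);
  const rank = Math.ceil((p / 100) * sorted.length);
  return sorted[rank - 1];
}

// Hypothetical latencies in milliseconds: mostly fast, with one slow outlier.
const latenciesMs = [10, 9, 11, 10, 10, 12, 9, 10, 11, 100];
const mean = latenciesMs.reduce((sum, x) => sum + x, 0) / latenciesMs.length;

console.log(`mean: ${mean.toFixed(1)} ms`);
console.log(`p50:  ${percentile(latenciesMs, 50)} ms`);
console.log(`p99:  ${percentile(latenciesMs, 99)} ms`);
```

In this sample the median stays at 10 ms while a single outlier drags the p99 to 100 ms, which is why we look at the whole distribution rather than the average alone.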

Methodologies

To assess cold start times representative of real-world applications, we used a common serverless cloud setup: a Docker container with AWS Lambda. This is a popular approach due to its control, flexibility, and consistency: Docker containers simplify standardizing setup across environments, grant full control over dependencies (including system-level ones), can be very large (up to 10GB), and can use any runtime version beyond what’s included in AWS Lambda.

There are other ways to run JavaScript on AWS Lambda. AWS also provides its own managed Node runtime optimized for the Lambda environment. Despite not being an apples-to-apples comparison, we’ve included benchmarks from it simply as a reference. AWS also created LLRT (Low Latency Runtime) to minimize startup times, but we weren’t able to run the benchmark apps on it due to incomplete Node.js compatibility support.

Since the majority of the serverless use cases are backend, we benchmarked API servers using three common frameworks: Express, Fastify, and Hono. In each framework, we implemented a simple link shortener. To make them representative of real world use cases, each imports several dependencies, and uses both server-side logic and server-side rendering.

Docker images were created using AWS Lambda Web Adapter, which required no changes to the application code to deploy to Lambda. To further reduce cold start times, we run the program first when preparing the Docker image. This installs dependencies and initializes various runtime caches, which are made part of the Docker image, so they’re immediately available when the program executes in AWS Lambda.

All Lambda functions used a common configuration:

- Region: us-west-2

- CPU arch: x86_64

- Memory: 512MB

Note that AWS Lambda can also run on Graviton (ARM) processors, which Deno supports. However, cold start times on Graviton were measured to be slower across all runtimes benchmarked, including Deno, Node, and Bun (view raw results). As a result, the analysis in this post focuses on x86_64.

The runtimes and their versions that we used in our benchmarks are:

- Deno: 1.45.2

- Node:

  - With Docker: 22.5.1

  - With Lambda managed Node runtime: 20.14.0 *

- Bun: 1.1.19

* Note that we also benchmarked the AWS Lambda managed Node runtime as a comparison point, even though it does not use the same Docker setup as the other runtimes.

To simulate a cold start, we updated the Lambda configuration between invocations by modifying an environment variable. Cold start latency was observed by parsing Init Duration in CloudWatch events after each invocation. You can view the script to run the benchmark here.
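As a rough illustration of the parsing step, the sketch below extracts Init Duration from a Lambda REPORT log line. It is not the benchmark script itself: the `parseInitDurationMs` helper and the sample log lines (including the request IDs and timings) are made up for this example. It relies on Lambda including the Init Duration field only when an invocation went through a cold start.

```typescript
// Matches the "Init Duration: <number> ms" field of a Lambda REPORT log line.
const INIT_DURATION_RE = /Init Duration:\s*([\d.]+)\s*ms/;

// Returns the Init Duration in milliseconds, or null when the field is
// absent (for example, on a warm invocation).
function parseInitDurationMs(reportLine: string): number | null {
  const value = INIT_DURATION_RE.exec(reportLine)?.[1];
  return value === undefined ? null : Number.parseFloat(value);
}

// Hypothetical REPORT lines; the values are invented for illustration.
const coldLine =
  "REPORT RequestId: 00000000-0000-0000-0000-000000000000\t" +
  "Duration: 3.21 ms\tBilled Duration: 4 ms\tMemory Size: 512 MB\t" +
  "Max Memory Used: 80 MB\tInit Duration: 412.35 ms";
const warmLine =
  "REPORT RequestId: 11111111-1111-1111-1111-111111111111\t" +
  "Duration: 2.87 ms\tBilled Duration: 3 ms\tMemory Size: 512 MB\t" +
  "Max Memory Used: 80 MB";

console.log(parseInitDurationMs(coldLine)); // cold start: a number
console.log(parseInitDurationMs(warmLine)); // warm start: null
```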

Note that the Init Duration specifically measures the Lambda Init phase latency, which may not fully capture the overall end-to-end cold start latency experienced by the client. Specifically, Init Duration does not include the time it takes Lambda to copy code artifacts to the Lambda sandbox or the network RTT from the client.

We believe that Init Duration is a reliable proxy to represent cold start latency for the application servers defined in this blog post because:

- Overall Docker image sizes are relatively small (less than 85MB)

- Cold start time differences between runtimes are dominated by the runtime and npm modules initialization measured by Init Duration (see Startup time on Linux VM section for a separate benchmark confirming this)

- It is simple to measure using a Lambda Function URL with no additional dependencies

Benchmarks

We ran each benchmark 20 times for each combination of runtime and framework. For each iteration, we forced a cold start and measured Init Duration. You can view the raw results here.

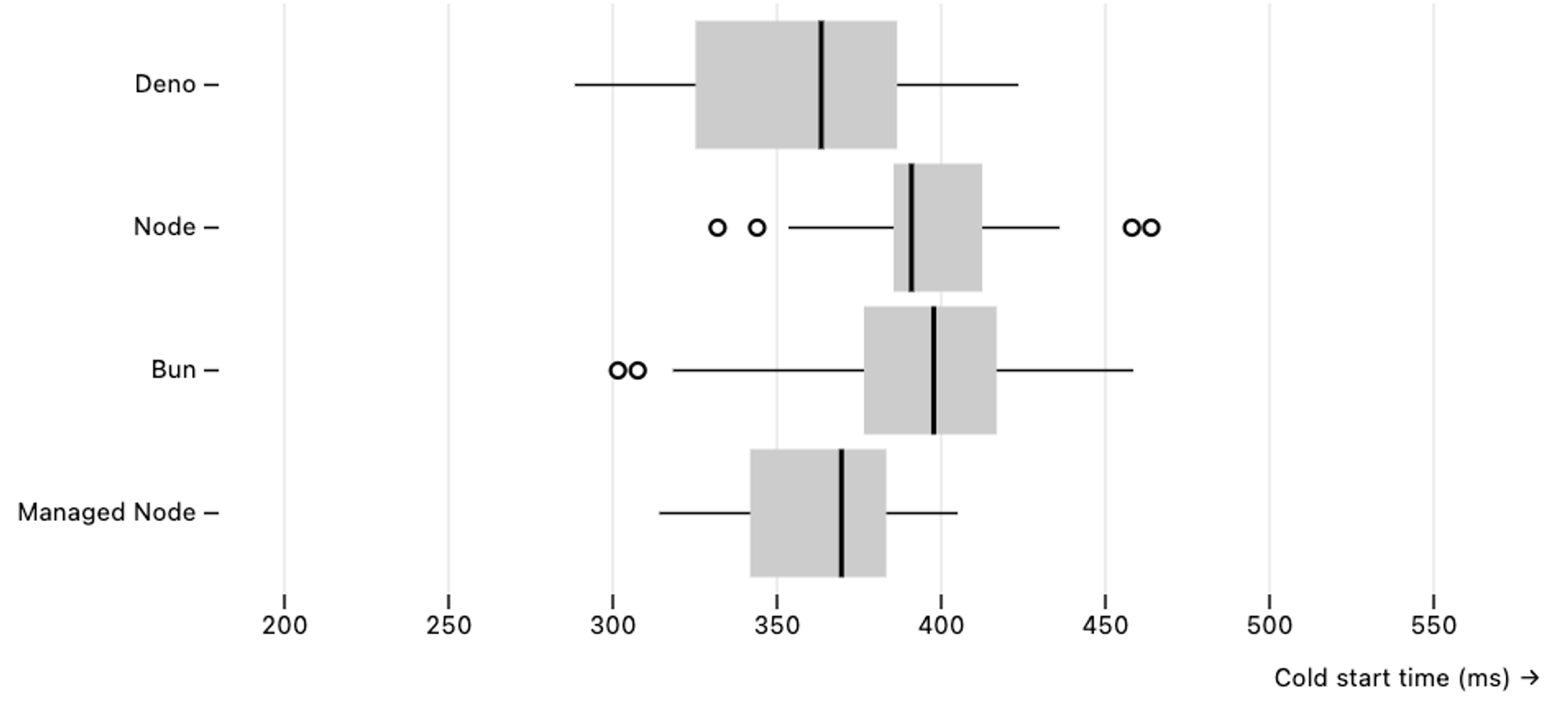

Express

The benchmarks used this Express URL shortener app, which uses Express version 4.19.2.

Deno’s Init Duration times were lower than those of Node and Bun on Docker.

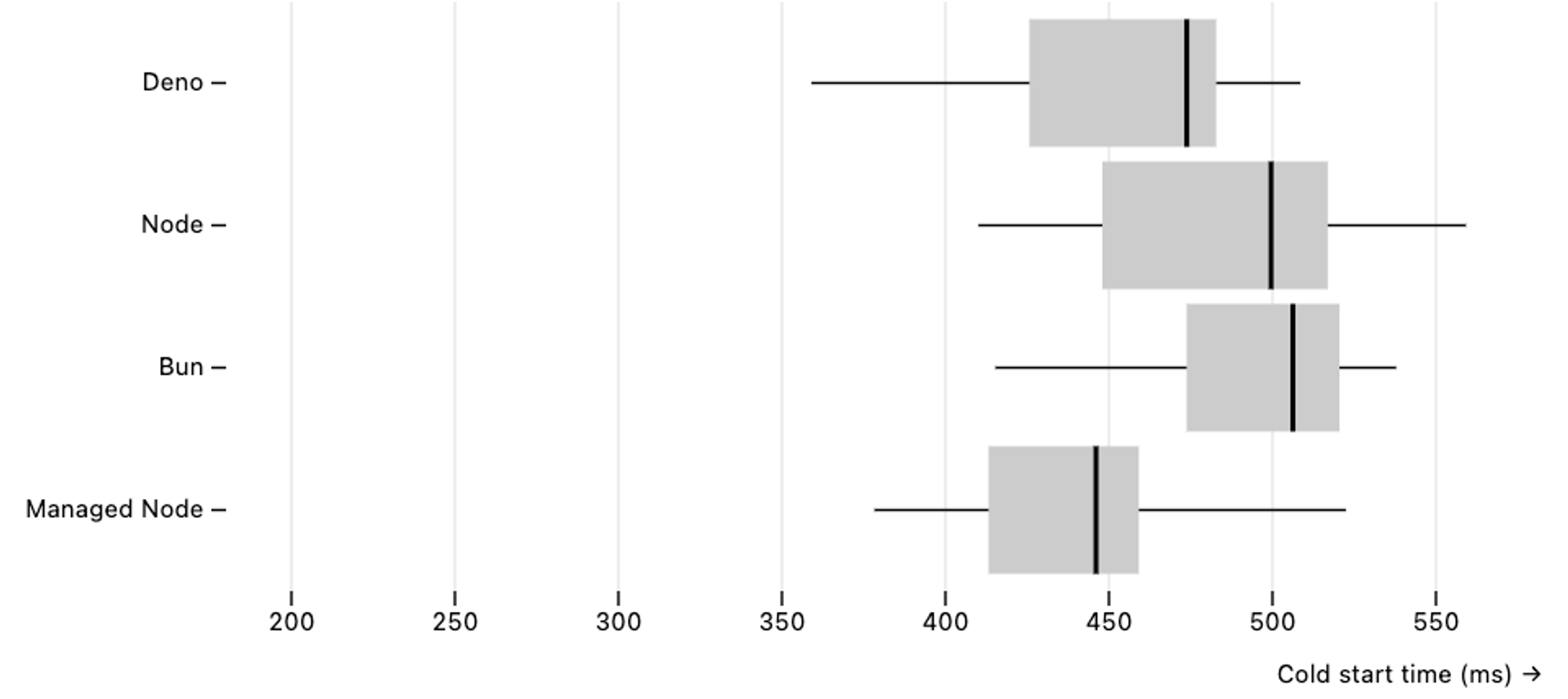

Fastify

The benchmarks used this Fastify URL shortener app, which uses Fastify version 4.28.

Deno’s Init Duration times were lower than those of Node and Bun on Docker.

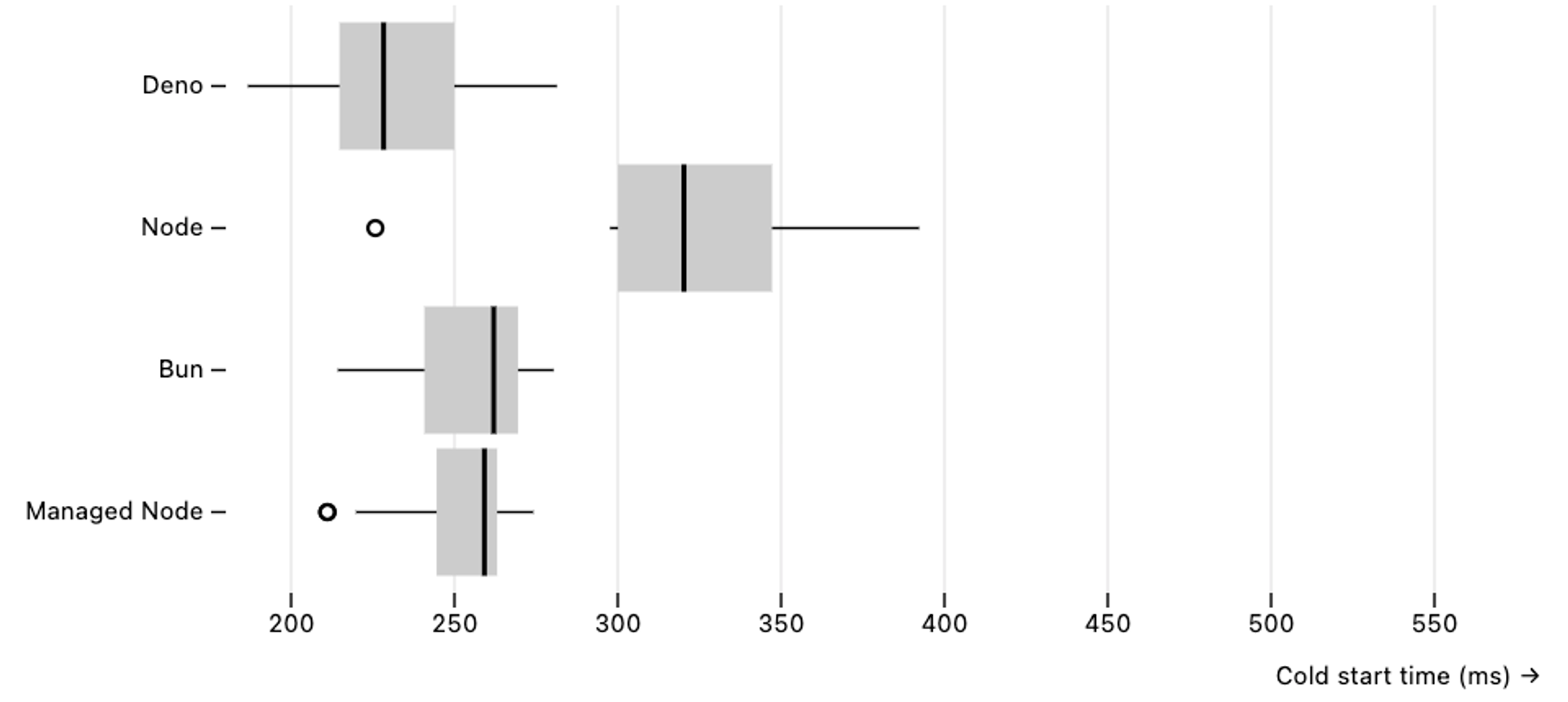

Hono

The benchmarks used this Hono URL shortener app, which uses Hono version 4.4.6.

Deno’s Init Duration times were lower than those of Node and Bun on Docker.

Overall, Deno’s cold starts were consistently faster than those of Node and Bun when using Docker in AWS Lambda.

Startup time on Linux VM

We wanted to verify our Lambda benchmark findings by manually running the apps directly on a Linux VM and measuring their startup latency. To do this, we provisioned an e2-medium VM in the Google Cloud us-west1 region, installed the Deno, Node, and Bun runtimes, and started the same set of URL shortener API servers for many iterations using the hyperfine benchmarking tool. Since we’re only interested in measuring the startup latency, a small modification was made to the API servers’ application code to exit immediately after initialization.

We ran the following command-line for each of the API servers:

hyperfine --warmup 2 "deno run -A main.mjs" "node main.mjs" "bun main.mjs"

Deno startup latency was faster than Node and Bun across all benchmarked frameworks.

Express

In our benchmark, Deno’s cold start times in a VM environment were roughly 33% faster than those of Bun and 36% faster than those of Node.

Benchmark 1: deno run -A main.mjs

Time (mean ± σ): 134.9 ms ± 2.6 ms [User: 111.3 ms, System: 32.7 ms]

Range (min … max): 130.6 ms … 139.9 ms 21 runs

Benchmark 2: node main.mjs

Time (mean ± σ): 183.7 ms ± 3.9 ms [User: 187.1 ms, System: 31.6 ms]

Range (min … max): 177.9 ms … 194.5 ms 15 runs

Benchmark 3: bun main.mjs

Time (mean ± σ): 178.8 ms ± 3.0 ms [User: 160.3 ms, System: 38.5 ms]

Range (min … max): 173.0 ms … 183.8 ms 16 runs

Summary

deno run -A main.mjs ran

1.33 ± 0.03 times faster than bun main.mjs

1.36 ± 0.04 times faster than node main.mjs

Fastify

In our benchmarks of running Fastify in a VM, Deno’s cold start times were about 39% faster than those of Node and 46% faster than those of Bun.

Benchmark 1: deno run -A main.mjs

Time (mean ± σ): 187.3 ms ± 5.8 ms [User: 145.7 ms, System: 52.4 ms]

Range (min … max): 180.4 ms … 204.4 ms 15 runs

Benchmark 2: node main.mjs

Time (mean ± σ): 261.1 ms ± 5.5 ms [User: 285.2 ms, System: 38.4 ms]

Range (min … max): 252.5 ms … 273.5 ms 11 runs

Benchmark 3: bun main.mjs

Time (mean ± σ): 273.0 ms ± 7.0 ms [User: 244.0 ms, System: 48.8 ms]

Range (min … max): 265.0 ms … 292.5 ms 11 runs

Summary

deno run -A main.mjs ran

1.39 ± 0.05 times faster than node main.mjs

1.46 ± 0.06 times faster than bun main.mjs

Hono

In our benchmark of running Hono in a VM, Deno’s cold start times were about 71% faster than those of Bun and 77% faster than those of Node.

Benchmark 1: deno run -A main.mjs

Time (mean ± σ): 57.6 ms ± 3.4 ms [User: 40.8 ms, System: 22.8 ms]

Range (min … max): 52.9 ms … 65.8 ms 45 runs

Benchmark 2: node main.mjs

Time (mean ± σ): 102.0 ms ± 2.6 ms [User: 93.6 ms, System: 22.9 ms]

Range (min … max): 98.2 ms … 107.4 ms 27 runs

Benchmark 3: bun main.mjs

Time (mean ± σ): 98.6 ms ± 11.3 ms [User: 90.8 ms, System: 35.2 ms]

Range (min … max): 86.8 ms … 117.9 ms 26 runs

Summary

deno run -A main.mjs ran

1.71 ± 0.22 times faster than bun main.mjs

1.77 ± 0.11 times faster than node main.mjs

According to our benchmarks in a VM setup, Deno still consistently yielded the fastest cold start times when compared to Bun and Node.
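The "times faster" figures in the hyperfine summaries are ratios of mean run times. As a quick sanity check, the sketch below recomputes them from the means reported above. The `speedup` and `timeSaved` helpers are names invented for this example, and the error margins hyperfine reports are left out.

```typescript
// Mean startup times in milliseconds, copied from the hyperfine output above.
const results = [
  { framework: "Express", deno: 134.9, node: 183.7, bun: 178.8 },
  { framework: "Fastify", deno: 187.3, node: 261.1, bun: 273.0 },
  { framework: "Hono", deno: 57.6, node: 102.0, bun: 98.6 },
];

// "N times faster" is the other runtime's mean divided by the baseline's mean.
function speedup(baselineMs: number, otherMs: number): number {
  return otherMs / baselineMs;
}

// Fraction of startup time saved by the baseline relative to the other runtime.
function timeSaved(baselineMs: number, otherMs: number): number {
  return 1 - baselineMs / otherMs;
}

for (const r of results) {
  console.log(
    `${r.framework}: ${speedup(r.deno, r.node).toFixed(2)}x vs Node, ` +
      `${speedup(r.deno, r.bun).toFixed(2)}x vs Bun, ` +
      `${(timeSaved(r.deno, r.node) * 100).toFixed(0)}% less time than Node`,
  );
}
```

Note that a ratio of 1.33 corresponds to spending roughly a quarter less time starting up, so the ratio and the share of time saved are two different ways of reading the same measurement.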

Optimizing Deno for a serverless environment

In AWS Lambda, Deno consistently demonstrated the fastest cold start times compared with other runtimes. Deno relies on various runtime caches to maximize startup performance. Some of these are:

- JSR modules

- npm modules

- Module graph

- CJS export analysis

- Transpiled TypeScript

- V8 code cache (bytecode cache)

It’s important that these caches are populated ahead of time during the Docker image creation, so that they’re immediately available when your application executes in AWS Lambda. You can ensure that by adding the following line in your Dockerfile:

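RUN timeout 10s deno run --allow-net main.ts || [ $? -eq 124 ] || exit 1

This starts the server during the image build so that Deno can populate its caches, and timeout stops it after 10 seconds. GNU timeout exits with status 124 when the time limit is reached, so the build step treats that case as success and fails on any other non-zero exit status.

This is a trick not many people know. Luckily, in the future that knowledge will be unnecessary, as it will become part of deno cache itself.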

What’s next

Performance is a critical consideration when it comes to writing production software. As always, Deno is committed to improving performance, not just in the runtime with each minor release, but also across our tooling, such as our recent optimizations in our language server. Stay tuned for more performance and optimization improvements across Deno.

🚨️ Deno 2 is right around the corner 🚨️

There are some minor breaking changes in Deno 2, but you can make your migration smoother by using the DENO_FUTURE=1 flag today.